- WEBSCRAPER OUT OF SELENIUM INSTALL

- WEBSCRAPER OUT OF SELENIUM UPDATE

- WEBSCRAPER OUT OF SELENIUM UPGRADE

- WEBSCRAPER OUT OF SELENIUM CODE

WEBSCRAPER OUT OF SELENIUM INSTALL

&& sh -c 'echo "deb stable main" > /etc/apt//google.list' \
&& apt-get install -y google-chrome-unstable fonts-ipafont-gothic fonts-wqy-zenhei fonts-thai-tlwg fonts-kacst ttf-freefont \

WEBSCRAPER OUT OF SELENIUM UPDATE

RUN apt-get update && apt-get install -y wget --no-install-recommends \

WEBSCRAPER OUT OF SELENIUM UPGRADE

One thing you probably want to do is debug your code. In VS Code you’ll have to add a debug configuration. This can be achieved by adding the following configuration in launch.json. Notice the “Launch program” configuration inside the debug panel of VS Code.

Well, you’ve got your scraper working on Node using TypeScript. The next thing is to host it in the cloud. We want to containerize the application inside a Docker container. Building a Docker container requires a Dockerfile. Here’s one that works for the Puppeteer scraper. The Google Chrome team has made a nice Dockerfile with some tricks applied; I basically copied that. Secondly, this is a nice blog post about running Docker containers on Azure Container Instances.

RUN apt-get update && apt-get -yq upgrade && apt-get install \

Once you’ve set up the TypeScript configuration it’s time to set up an NPM project. You are now ready to start developing your TypeScript application.

You probably need some packages to interface with Puppeteer, Azure storage or whatever. A lot of packages have separate TypeScript definition packages. These are required to have type checking. You should install them as a dev-dependency instead of a regular dependency.

Once you’ve installed your dependencies you can start developing your scraper. It’s all up to you to interact with the page and retrieve the right information. A very basic example is this: `const browser = await puppeteer.launch()`. Evaluate the page and interact with it.
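The basic flow described here (launch a browser, open a page, read something from it) could be sketched roughly as follows. This is only an illustration, not the scraper from this post: `scrapeTitle`, `ScrapeResult`, `PageLike` and `BrowserLike` are hypothetical names, and the two interfaces are an assumed minimal subset of Puppeteer's `Browser` and `Page` (`newPage`, `close`, `goto`, `title`), so the function can be exercised without a real browser.

```typescript
// Assumed minimal subset of Puppeteer's Page API used by this sketch.
interface PageLike {
  goto(url: string): Promise<unknown>;
  title(): Promise<string>;
}

// Assumed minimal subset of Puppeteer's Browser API used by this sketch.
interface BrowserLike {
  newPage(): Promise<PageLike>;
  close(): Promise<void>;
}

// Hypothetical result shape, for illustration only.
interface ScrapeResult {
  url: string;
  title: string;
}

// Opens a page, navigates to the url, reads the document title,
// and closes the browser even when navigation fails.
async function scrapeTitle(browser: BrowserLike, url: string): Promise<ScrapeResult> {
  try {
    const page = await browser.newPage();
    await page.goto(url);
    const title = await page.title();
    return { url, title: title.trim() };
  } finally {
    await browser.close();
  }
}

// With the real library this would be called along the lines of:
//   import puppeteer from "puppeteer";
//   const result = await scrapeTitle(await puppeteer.launch(), "https://example.com");
```

Typing the function against a small interface rather than the concrete Puppeteer classes is a design choice of this sketch: it keeps the page logic testable with a fake browser object.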

WEBSCRAPER OUT OF SELENIUM CODE

But then on a bright day I figured I would use Azure Logic Apps instead. Logic Apps are great for defining and running workflows and look like a perfect fit. They are pay per usage and are easy to develop!

Puppeteer, TypeScript and NodeJs

I wanted to brush up my TypeScript and NodeJS skills since it has been a while since I seriously developed in TypeScript. The last time I did something significant I was still using Visual Studio instead of VS Code for TypeScript development. So here’s the story of getting a puppeteer scraper working in NodeJs and TypeScript.

Dependencies

First of all, get a TypeScript tsconfig.json file in place using the following command (`tsc --init`), which reports: message TS6071: Successfully created a tsconfig.json file.

A sample of how your TypeScript configuration file might look is this. One important thing is to enable source maps. This allows you to debug your TypeScript code instead of debugging the transpiled JavaScript (which is a mess).

My initial idea was to run puppeteer inside an Azure Function; however, after some research I came to the conclusion that running a headless browser on Azure PaaS or Serverless is not going to happen. So what are the alternatives? Well, containers seem like a reasonable solution. I can spin up and tear down the container with some orchestration and thereby limit my costs. A good starting point for running puppeteer containers in Azure is this blog post.

For orchestrating the scraper I was thinking about using Azure Functions again.

Other packages spin up an entire (headless) browser and perform actual DOM operations. Since I want to scrape different ecommerce sites, spinning up an actual browser looked like the way to go, also because lots of ecommerce sites rely a lot on JavaScript. Some are built as an SPA, and that by definition requires a browser-based approach. After some research I stumbled upon `puppeteer`: a headless Chrome API built by Google itself, very promising.

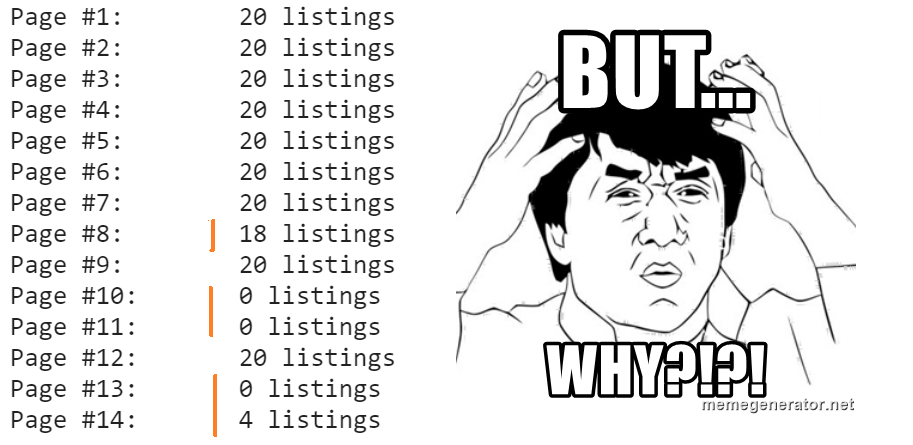

Utilizing Serverless and PaaS services is challenging. I don’t want to pay for a VM and just deploy the scraper on it, because I need the solution to be scalable. Secondly, I only want to pay for actual usage and not for a VM that’s idle. I want to scrape certain websites twice a day. This frequency might change in the future, so I don’t want to have it hard coded. I’m scraping ecommerce sites, and the pages that need to be scraped depend on a list of ids coming from a database. Lastly, the output of the scraper has to be stored in a database. Later on I will have to develop some UI which discloses the information for ecommerce traders.

Web scraping comes in different shapes and sizes. Some packages just perform HTTP calls and evaluate the response.
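To make the requirements above a bit more concrete, here is a minimal sketch of keeping the scrape frequency in configuration instead of hard coding it, and of deriving the pages to scrape from a list of ids. Everything in it is an assumption for illustration: `ScrapeConfig`, `intervalMs`, `buildTargets`, the `{id}` placeholder and the example URL are hypothetical, and loading the ids from the database is left out.

```typescript
// Hypothetical configuration shape; none of these names come from an existing library.
interface ScrapeConfig {
  runsPerDay: number;   // e.g. 2 for "twice a day"
  urlTemplate: string;  // expected to contain the placeholder "{id}"
}

// Milliseconds between two runs, assuming runs are spread evenly over 24 hours.
function intervalMs(config: ScrapeConfig): number {
  if (!Number.isInteger(config.runsPerDay) || config.runsPerDay <= 0) {
    throw new Error("runsPerDay must be a positive integer");
  }
  const msPerDay = 24 * 60 * 60 * 1000;
  return msPerDay / config.runsPerDay;
}

// Builds the list of pages to scrape from ids that were loaded elsewhere
// (for example from the database); each id is URL-encoded before insertion.
function buildTargets(config: ScrapeConfig, ids: readonly string[]): string[] {
  return ids.map((id) => config.urlTemplate.replace("{id}", encodeURIComponent(id)));
}

// Example configuration with an assumed URL pattern.
const exampleConfig: ScrapeConfig = {
  runsPerDay: 2,
  urlTemplate: "https://shop.example/p/{id}",
};
```

Changing the frequency then only means changing `runsPerDay` in the configuration, wherever that ends up being stored.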
